Crypto-Incentivized NMT Training

ALIA tokens are created solely through two types of work performed by the independent aliaOS compute nodes. Document Translation work originates with customers and is handled directly by the aliaOS peer-to-peer network in response to blockchain protocols. NMT work assignments originate from the ALIA Polyglot stack, so it is vital that all work elements be logged in a redundant, secure, and asynchronous fashion. All distributed computation assignments must be tracked, and confirmation must be returned to the parameter server. The aliaOS nodes will submit these transactional records to the blockchain for validation, consensus, and eventual addition to the ALIA blockchain.

The ALIA platform tracks and evaluates the progress of NMT training with real-time logging capabilities that are used to audit calculation tasks as they are sent from the Polyglot stack to the compute nodes. Each calculation task is described as an “operation” and stored in a computational graph. Each operation in a graph is given a unique name in the form of a hashed identifier. The Polyglot stack’s parameter server is responsible for routing, recording, and returning the results for each operation sent to nodes for mathematical processing.
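The bookkeeping described above can be illustrated with a minimal sketch. It is not the ALIA implementation: the names `operation_id`, `OperationRecord`, and `ParameterServerLog`, the use of SHA-256, and the fields that are hashed are all assumptions made for illustration only.

```python
import hashlib
import json
from dataclasses import dataclass, field
from typing import Any, Dict, List, Optional


def operation_id(graph_id: str, op_type: str, inputs: List[str]) -> str:
    """Derive a deterministic hashed name for an operation.

    Assumption: the identifier is a SHA-256 digest of the graph name,
    the operation type, and the names of its inputs.
    """
    payload = json.dumps(
        {"graph": graph_id, "type": op_type, "inputs": inputs},
        sort_keys=True,
    )
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


@dataclass
class OperationRecord:
    """One operation sent to a compute node (hypothetical record layout)."""
    op_id: str
    node_id: str
    result: Optional[Any] = None
    confirmed: bool = False


@dataclass
class ParameterServerLog:
    """Tracks which operations were routed where and which have returned."""
    records: Dict[str, OperationRecord] = field(default_factory=dict)

    def dispatch(self, op_id: str, node_id: str) -> None:
        # Record the assignment before the node starts work.
        self.records[op_id] = OperationRecord(op_id=op_id, node_id=node_id)

    def confirm(self, op_id: str, result: Any) -> None:
        # Store the returned result and mark the operation as confirmed.
        record = self.records[op_id]
        record.result = result
        record.confirmed = True

    def unconfirmed(self) -> List[str]:
        # Operations that were dispatched but have not reported back.
        return [r.op_id for r in self.records.values() if not r.confirmed]
```

In this sketch an auditor could call `unconfirmed()` at any time to list assignments that were routed to a node but never returned a result.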

A tremendous amount of information is tracked during an NMT training cycle, including networking statistics and hardware performance metrics. Our system can record operations and results down to the smallest unit of calculation performed on a GPU card. It is vital to understand how computational metrics are equated to a single ALIA token. Transparency is required, and a unit of measure must be published in advance of every new block added to the chain. Every work stream must be auditable for all participating miners. At the most basic level, the mathematical computations solved by worker nodes are based upon the way neural networks process data.

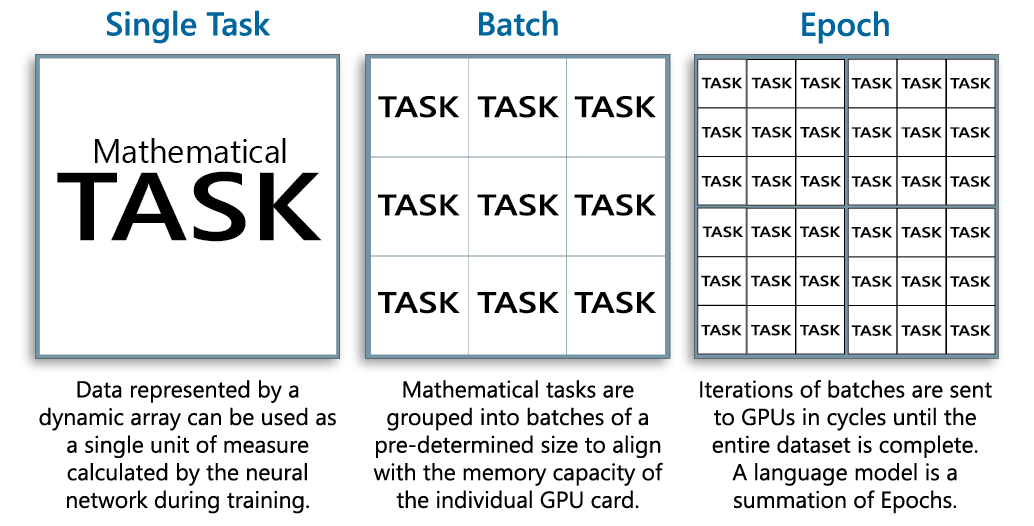

NMT work can be conceptualized as follows:

- A Single Task: a variable input, represented by a dynamic array, that the neural network algorithm calculates during the training process.

- A Batch: Mathematical tasks are grouped into batches of a pre-determined size to align with the memory capacity of the individual GPU card. Batches are distributed across the aliaOS compute nodes for processing in pre-defined iterations.

- An Epoch: Calculated results from iterations of batches are collected until a pre-defined number of batches have been processed.
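The relationship between tasks, batches, and an epoch can be sketched as follows. This is an illustrative outline only: `make_batches` and `run_epoch` are hypothetical helper names, the batch size is arbitrary, and a real training run would dispatch each batch to a compute node instead of processing it locally.

```python
from typing import Any, Callable, List, Sequence


def make_batches(tasks: Sequence[Any], batch_size: int) -> List[List[Any]]:
    """Group tasks into batches of a pre-determined size.

    The final batch may be smaller if the tasks do not divide evenly.
    """
    if batch_size <= 0:
        raise ValueError("batch_size must be positive")
    return [
        list(tasks[start:start + batch_size])
        for start in range(0, len(tasks), batch_size)
    ]


def run_epoch(
    batches: List[List[Any]],
    process_batch: Callable[[List[Any]], Any],
) -> List[Any]:
    """Process every batch once and collect the results.

    One pass over all batches corresponds to one epoch in this sketch.
    """
    return [process_batch(batch) for batch in batches]


# Example: ten tasks, batch size of four, summing each batch as a stand-in
# for the real computation performed on a GPU.
batches = make_batches(list(range(10)), batch_size=4)
epoch_results = run_epoch(batches, process_batch=sum)
```

Here ten tasks with a batch size of four yield three batches, so one epoch consists of three iterations.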

The summation of batches equals an Epoch. Typically, thirteen epochs are performed, and the end result is the Language Translation Model File. The ALIA platform has a distributed NMT computing topology that allows all node capabilities to be embedded in containers. The DCMS ensures that nodes get the latest versions and perform rolling upgrades between transactions. This model leaves very little room for a dishonest node, as there is no open-source code from which to create hybrid builds and no easy way to hack into the ecosystem to alter the operations of the distributed network.